Latest stories

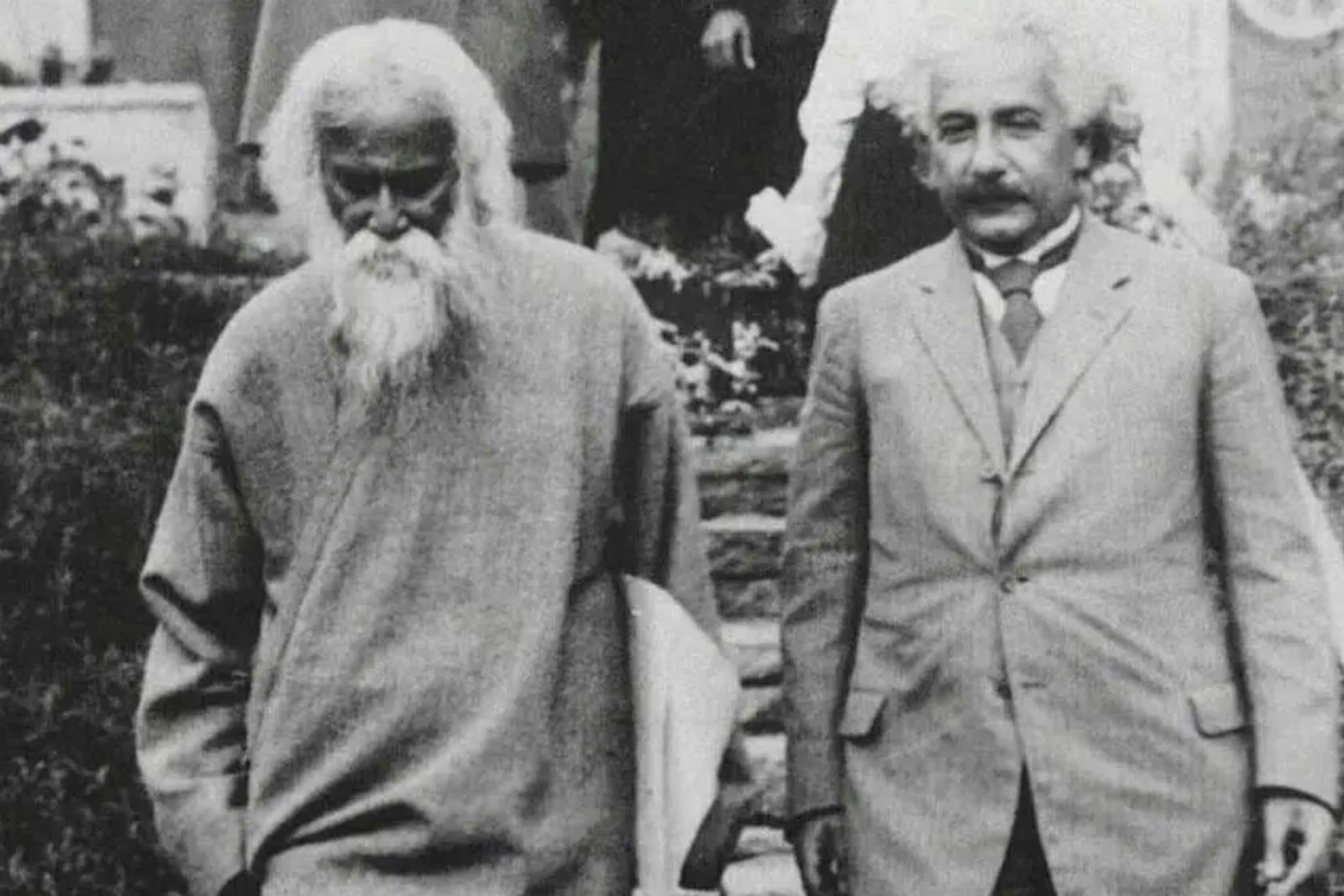

Einstein-Tagore dialogue shines a path for modern leaders

US is no longer the arsenal of democracy

In the investigative reports named above, intelligence officers explained how Gospel helped them go “from 50 targets per year” to “100 targets in one day” – and that, at its peak, Lavender managed to “generate 37,000 people as potential human targets.” They also reflected on how using AI cuts down deliberation time: “I would invest 20 seconds for each target at this stage ... I had zero added value as a human ... it saved a lot of time.”

They justified this lack of human oversight in light of a manual check the Israel Defense Forces (IDF) ran on a sample of several hundred targets generated by Lavender in the first weeks of the Gaza conflict, through which a 90% accuracy rate was reportedly established.

While details of this manual check are likely to remain classified, a 10% inaccuracy rate for a system used to make 37,000 life-and-death decisions will inevitably have devastating consequences: if that rate held across all 37,000 people flagged, roughly 3,700 would have been wrongly identified.

But importantly, any accuracy rate that sounds reasonably high makes it more likely that algorithmic targeting will be relied on, as it allows trust to be delegated to the AI system. As one IDF officer told +972 Magazine: “Because of the scope and magnitude, the protocol was that even if you don't know for sure that the machine is right, you know that statistically it's fine. So you go for it.”

The IDF denied these revelations in an official statement to The Guardian. A spokesperson said that while the IDF does use “information management tools [...] in order to help intelligence analysts to gather and optimally analyse the intelligence, obtained from a variety of sources, it does not use an AI system that identifies terrorist operatives”.

The Guardian has since, however, published a video of a senior official of the Israeli elite intelligence Unit 8200 talking last year about the use of machine learning “magic powder” to help identify Hamas targets in Gaza.

The newspaper has also confirmed that the commander of the same unit wrote in 2021, under a pseudonym, that such AI technologies would resolve the “human bottleneck for both locating the new targets and decision-making to approve the targets.”

Scale of civilian harm

AI accelerates the speed of warfare in terms of the number of targets produced and the time to decide on them.

While these systems inherently decrease the ability of humans to control the validity of computer-generated targets, they simultaneously make these decisions appear more objective and statistically correct due to the value that we generally ascribe to computer-based systems and their outcome.

This allows for the further normalization of machine-directed killing, amounting to more violence, not less.

While media reports often focus on the number of casualties, body counts – similar to computer-generated targets – have the tendency to present victims as objects that can be counted. This reinforces a very sterile image of war.

It glosses over the reality of more than 34,000 people dead, 766,000 injured, the destruction of or damage to 60% of Gaza's buildings, the displaced persons, and the lack of access to electricity, food, water and medicine.

It fails to emphasise the horrific stories of how these things tend to compound each other. For example, one civilian, Shorouk al-Rantisi, was reportedly found under the rubble after an airstrike on Jabalia refugee camp and had to wait 12 days to be operated on without painkillers. She now resides in another refugee camp with no running water to tend to her wounds.

Aside from increasing the speed of targeting and therefore exacerbating the predictable patterns of civilian harm in urban warfare, algorithmic warfare is likely to compound harm in new and under-researched ways. First, as civilians flee their destroyed homes, they frequently change addresses or give their phones to loved ones.

Such survival behaviour corresponds to what the reports on Lavender say the AI system has been programmed to identify as likely association with Hamas. These civilians thereby unknowingly make themselves suspects for lethal targeting.

Beyond targeting, these AI-enabled systems also inform additional forms of violence. An illustrative story is that of the fleeing poet Mosab Abu Toha, who was allegedly arrested and tortured at a military checkpoint.

The New York Times ultimately reported that he, along with hundreds of other Palestinians, was wrongfully identified as Hamas by the IDF's use of AI facial recognition and Google Photos.

Over and beyond the deaths, injuries and destruction, these are the compounding effects of algorithmic warfare. It becomes a psychic imprisonment where people know they are under constant surveillance, yet do not know which behavioural or physical “features” will be acted on by the machine.

From our work as analysts of the use of AI in warfare, it is apparent that our focus should not solely be on the technical prowess of AI systems or the figure of the human-in-the-loop as a failsafe.

We must also consider these systems' ability to alter human-machine-human interactions, where those executing algorithmic violence are merely rubber-stamping the output generated by the AI system, and those undergoing the violence are dehumanized in unprecedented ways.

Lauren Gould is Assistant Professor, Conflict Studies, Utrecht University; Linde Arentze is Researcher into AI and Remote Warfare, NIOD Institute for War, Holocaust and Genocide Studies; and Marijn Hoijtink is Associate Professor in International Relations, University of Antwerp.

This article is republished from The Conversation under a Creative Commons license. Read the original article.
