Nvidia Unveils Generative AI Tools, Simulation And Perception Workflows For Robotics Development

Robotics & Automation News

Nvidia has announced a range of updates to its robotics offerings at ROSCon 2024, aimed at accelerating the development of AI-powered robot arms and autonomous mobile robots in particular.

At ROSCon in Odense, one of Denmark's oldest cities and a hub of automation, Nvidia and its robotics ecosystem partners announced generative AI tools, simulation, and perception workflows for Robot Operating System (ROS) developers.

Among the reveals were new generative AI nodes and workflows for ROS developers deploying to the Nvidia Jetson platform for edge AI and robotics.

Generative AI enables robots to perceive and understand the context of their surroundings, communicate naturally with humans and make adaptive decisions autonomously.

Generative AI comes to ROS community

ReMEmbR, built on ROS 2, uses generative AI to enhance robotic reasoning and action. It combines large language models (LLMs), vision language models (VLMs) and retrieval-augmented generation to allow robots to build and query long-term semantic memories and improve their ability to navigate and interact with their environments.

The speech recognition capability is powered by the WhisperTRT ROS 2 node. This node uses Nvidia TensorRT to optimize OpenAI's Whisper model to enable low-latency inference on Nvidia Jetson, resulting in responsive human-robot interaction.

The ROS 2 robots with voice control project uses the Nvidia Riva ASR-TTS service to enable robots to understand and respond to spoken commands.

The NASA Jet Propulsion Laboratory independently demonstrated ROSA, an AI-powered agent for ROS, operating on its Nebula-SPOT robot and the Nvidia Nova Carter robot in Nvidia Isaac Sim.

At ROSCon, Canonical is demonstrating NanoOWL, a zero-shot object detection model running on the Nvidia Jetson Orin Nano system-on-module. It allows robots to identify a broad range of objects in real time, without relying on predefined categories.

Developers can get started today with ROS 2 Nodes for Generative AI, which brings Nvidia Jetson-optimized LLMs and VLMs to enhance robot capabilities.

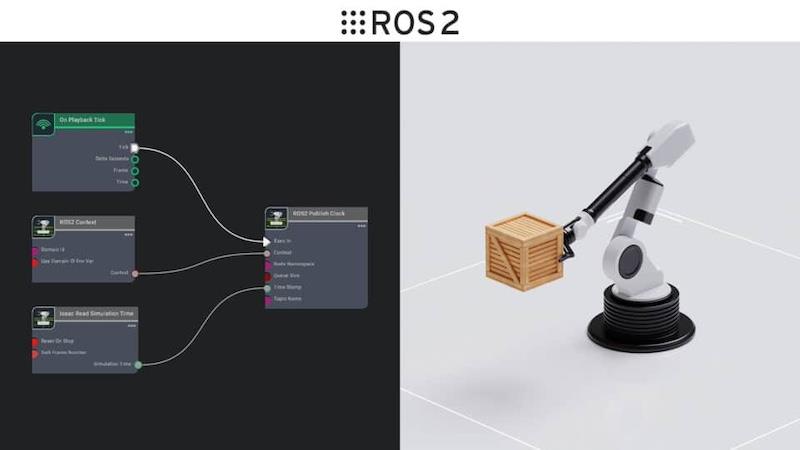

Enhancing ROS workflows with a 'sim-first' approach

Simulation is critical to safely test and validate AI-enabled robots before deployment. Nvidia Isaac Sim, a robotics simulation platform built on OpenUSD, provides ROS developers with a virtual environment to test robots by easily connecting them to their ROS packages.

A new Beginner's Guide to ROS 2 Workflows With Isaac Sim, which illustrates the end-to-end workflow for robot simulation and testing, is now available.

Foxglove, a member of the Nvidia Inception program for startups, demonstrated an integration that helps developers visualize and debug simulation data in real time using Foxglove's custom extension, built on Isaac Sim.

New capabilities for Isaac ROS 3.2

Nvidia Isaac ROS, built on the open-source ROS 2 software framework, is a suite of accelerated computing packages and AI models for robotics development. The upcoming 3.2 release enhances robot perception, manipulation and environment mapping.

Key improvements to Nvidia Isaac Manipulator include new reference workflows that integrate FoundationPose and cuMotion to accelerate development of pick-and-place and object-following pipelines in robotics.

Nvidia Isaac Perceptor also gains a new visual SLAM reference workflow, enhanced multi-camera detection and 3D reconstruction to improve an autonomous mobile robot's (AMR) environmental awareness and performance in dynamic settings such as warehouses.

Partners adopting Nvidia Isaac

Robotics companies are integrating Nvidia Isaac accelerated libraries and AI models into their platforms.

Universal Robots, a Teradyne Robotics company, launched a new AI Accelerator toolkit to enable the development of AI-powered cobot applications.

Miso Robotics is using Isaac ROS to speed up its AI-powered robotic french fry-making Flippy Fry Station and drive advances in efficiency and accuracy in food service automation.

Wheel.me is partnering with RGo Robotics and Nvidia to create a production-ready AMR using Isaac Perceptor.

Main Street Autonomy is using Isaac Perceptor to streamline sensor calibration.

Orbbec announced its Perceptor Developer Kit, an out-of-the-box AMR solution for Isaac Perceptor.

LIPS has introduced a multi-camera perception devkit for improved AMR navigation.

Canonical highlighted a fully certified Ubuntu environment for ROS developers, offering long-term support out of the box.
