The AI Boom May Unleash A Global Surge In Electronic Waste

San Francisco: The Silicon Valley arms race to build more powerful artificial intelligence programs could lead to a massive increase in electronic waste, research published Monday warns.

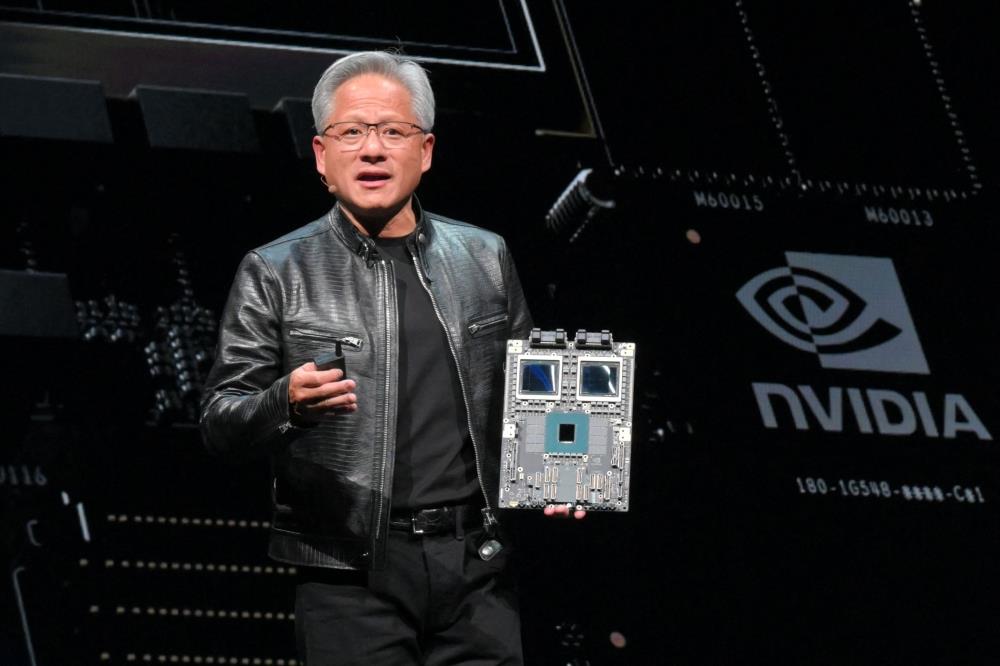

Leading tech companies are spending heavily to build and upgrade data centers to power generative AI projects and to stock them with powerful computer chips.

If the AI boom continues, the older chips and equipment could amount to extra electronic waste equivalent to throwing out 13 billion iPhones annually by 2030, the study from academics in China said.

Tech companies are facing growing scrutiny over the environmental costs of the generative AI technology that has captivated investors.

Running and cooling the powerful computer chips that generative AI requires takes huge amounts of energy and water.

Electronic waste is already a significant and growing global problem.

The vast majority is not recycled, with much of it ending up in landfills, according to an annual United Nations report on e-waste.

Computers and other electronics thrown out in the West are often exported to lower-income countries where people manually break apart old devices to access copper and other metals.

That low-paid labor exposes workers to harmful substances such as mercury and lead, according to the World Health Organization.

In the analysis published Monday, researchers at the Chinese Academy of Sciences predict that by 2030 the AI boom will increase the total amount of electronic trash generated globally by 3 to 12 percent, which could mean as much as 2.5 million metric tons of additional e-waste each year.

That range reflects different scenarios for how intensely companies invest in AI in the future.

The researchers based their calculations on the waste generated when a computer server running on a powerful Nvidia processor called the H100 is thrown out, and they assumed that companies would replace their systems every three years.

The researchers drew on the tech industry rule of thumb known as Moore's Law, which predicts that the number of transistors that can be crammed onto a silicon chip doubles every two years.

The study did not take into account potential waste from disposal of other equipment needed in data centers, such as cooling systems that keep chips from overheating.

"We hope this work brings attention to the often-overlooked environmental impact of AI hardware," said one of the study's authors.

"AI comes with tangible environmental costs beyond energy consumption and carbon emissions."

The peer-reviewed study was published Monday in the journal Nature Computational Science.

A spokesperson for Nvidia declined to comment.

The company's 2024 sustainability report said it is working to reduce emissions from its data centers and carefully recycle technology that its employees use.

Developing and deploying the algorithms behind generative AI tools like OpenAI's ChatGPT is significantly more resource-intensive than previous generations of software, requiring more advanced and power-hungry chips.

In July, Google said its carbon emissions had increased by 48 percent since 2019.

Microsoft said in May its emissions are up 29 percent since 2020, threatening the company's goal to make its operations carbon negative by 2030.

Growth in data centers is stressing electric grids around the United States and prompting power providers to delay the retirement of some coal plants and reboot old nuclear reactors to feed the energy demand.

Environmental advocates and people who live near proposed data centers have criticized the industry's growing footprint.

Big Tech companies say they are trying to find ways to meet the growing need while limiting the increase in emissions.

But until now, there has been little study of the potential trash problem created by the AI boom.

"There's so little information on the upstream and downstream impact of AI," said Sasha Luccioni, an AI researcher who has studied the environmental impacts of the AI boom and works as climate lead at Hugging Face, a company that provides tools for AI developers.

"We should be looking at the whole cycle."

Luccioni said companies are instead focused mostly on how to amass more computing power to better compete with rivals, leading them to replace still-working computer chips with brand-new ones.

"Everyone is just pursuing this bigger-is-better, faster-is-better phenomenon," she said. "It's kind of a herd mentality."

Although some investors on Wall Street and in Silicon Valley have warned it will be difficult for AI to be profitable enough to recoup recent heavy spending on computer hardware, leading AI developers have said they want to spend much more.

Microsoft said this year that its quarterly spending of $14 billion on data centers will continue to grow.

In September, OpenAI CEO Sam Altman presented White House officials with an analysis arguing that building several data centers across the nation at a cost of $100 billion for each facility would create jobs and economic growth.

Legal Disclaimer:

MENAFN provides the information "as is" without warranty of any kind. We do not accept any responsibility or liability for the accuracy, content, images, videos, licenses, completeness, legality, or reliability of the information contained in this article. If you have any complaints or copyright issues related to this article, kindly contact the provider above.