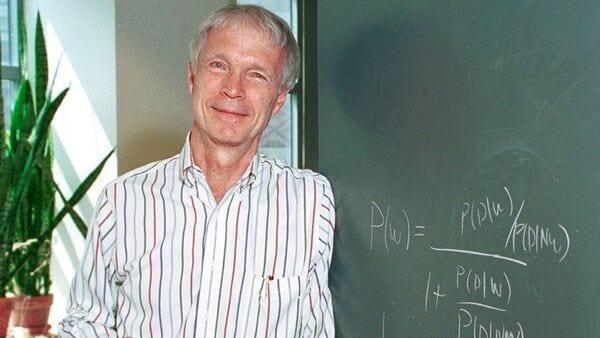

Nobel Physics Prize Winner John Hopfield Calls New AI Advances 'Unnerving', Cautions Against 'Lack Of Understanding'

Hopfield, 91, cautioned against a "lack" of deeper understanding of advanced technologies and warned of possible catastrophe if they are not kept in check, the report added.

Hopfield and co-winner Geoffrey Hinton, 76, both called for a "deeper understanding of the inner workings of deep-learning systems to prevent them from spiralling out of control", the report said.

Rise of Potentially Hazardous Tech

Speaking via video to an audience at a New Jersey university, Hopfield described nuclear physics and biological engineering as the two "powerful but potentially hazardous technologies" that have emerged during his lifetime.

"One is accustomed to having technologies that are not singularly only good or only bad but have capabilities in both directions. And as a physicist, I'm very unnerved by something which has no control, something which I don't understand well enough so that I can understand what are the limits which one could drive that technology," he said.

'AI is Very, Very Unnerving'

Hopfield said AI is also among the technologies whose limits must be understood if they are to be kept under control.

"That's the question AI is pushing," the physicist said. Despite modern AI systems appearing to be absolute marvels, there is a lack of understanding about how they function, which he described as "very, very unnerving".

"That's why I myself, and I think Geoffrey Hinton also, would strongly advocate understanding as an essential need of the field, which is going to develop some abilities that are beyond the abilities you can imagine at present," he added.

"You don't know that the collective properties you began with are actually the collective properties with all the interactions present, and you don't, therefore, know whether some spontaneous but unwanted thing is lying hidden in the works," stressed Hopfield.

Hopfield and Hinton jointly won the 2024 Nobel Prize in Physics for their pioneering work on the foundations of artificial intelligence. The pair's research on neural networks in the 1980s paved the way for technology that promises to revolutionise society but has also raised apocalyptic fears.

The "Hopfield network" is a theoretical model demonstrating how an artificial neural network can mimic the way biological brains store and retrieve memories. It was the basis for Hinton's "Boltzmann machine".

'Godfather of AI' Warns Against Tech

Hinton, often called the "Godfather of AI", improved upon Hopfield's model with his "Boltzmann machine", which paved the way for modern AI applications such as image generators.

Hinton has been critical of AI, and at a recent conference at the University of Toronto, where he is a professor emeritus, the British-Canadian warned that AI could take control from humans. Hinton quit his job at Google in 2023 to warn of the "profound risks to society and humanity" of the technology.

"I am worried that the overall consequence of this might be systems more intelligent than us that eventually take control," Hinton told reporters after the Nobel announcement.

"If you look around, there are very few examples of more intelligent things being controlled by less intelligent things, which makes you wonder whether when AI gets smarter than us, it's going to take over control," he added.

Hinton said it was impossible at present to know how to escape catastrophic scenarios: "That's why we urgently need more research."

"I'm advocating that our best young researchers, or many of them, should work on AI safety, and governments should force the large companies to provide the computational facilities that they need to do that," he added.

(With inputs from AFP)

Legal Disclaimer: MENAFN provides the information “as is” without warranty of any kind. We do not accept any responsibility or liability for the accuracy, content, images, videos, licenses, completeness, legality, or reliability of the information contained in this article. If you have any complaints or copyright issues related to this article, kindly contact the provider above.
