Google DeepMind Releases Two New AI Models For Robotics Development

March 25, 2025 by David Edwards

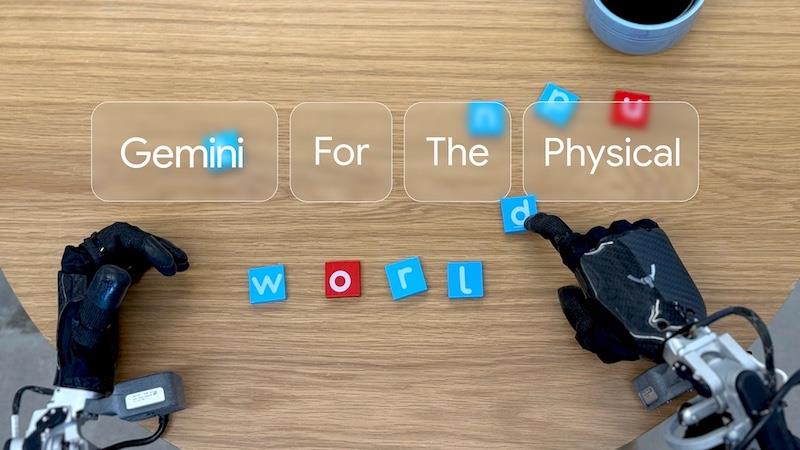

Google DeepMind has introduced two new artificial intelligence models – Gemini Robotics and Gemini Robotics-ER (short for “embodied reasoning”). Google says this marks a “major step forward” in the development of AI systems designed to control real-world robots.

Both models are built on the Gemini 2.0 platform and are aimed at enabling robots to perform a wide range of tasks with greater generality, interactivity, and dexterity. The initiative also includes a partnership with humanoid robot maker Apptronik to integrate these capabilities into the next generation of robotic assistants.

Gemini Robotics: Vision, language, and action combined

The first model, Gemini Robotics, is a vision-language-action (VLA) system designed to control physical robots. Unlike previous models, it adds physical actions as a new output modality, allowing it to interact with objects and environments in a more natural and human-like way.

Google DeepMind says the model excels in three core areas: generality, interactivity, and dexterity. It can generalise across tasks, handle novel environments, respond to natural language instructions in multiple languages, and perform complex manipulations such as folding origami or packing objects into containers.

It can also adapt to various robotic platforms, including dual-arm systems like ALOHA 2 and more complex humanoid robots such as Apptronik's Apollo.

Gemini Robotics-ER: Advanced spatial reasoning

The second model, Gemini Robotics-ER, enhances the system's spatial and contextual understanding. It allows roboticists to integrate Gemini's reasoning capabilities into their own robotic frameworks, connecting the model to low-level controllers for improved autonomy.

This model improves significantly on Gemini 2.0's abilities in 3D detection, state estimation, planning, and spatial reasoning. For example, when shown an object like a mug, Gemini Robotics-ER can infer the correct grasping approach and plan a safe movement path. It also leverages in-context learning, enabling it to learn new tasks from just a few human demonstrations.

Safety and responsible development

DeepMind says it is pursuing a layered approach to AI safety, integrating safeguards at both low and high levels of operation. Gemini Robotics-ER can be paired with traditional safety-critical systems, while also understanding whether a task is semantically safe in context.
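As a rough illustration of what a layered safety approach could look like in code, the Python sketch below pairs a high-level semantic check with a low-level limit on joint speeds. It is not DeepMind's implementation or API: every name in it (`Command`, `FORBIDDEN_PHRASES`, `MAX_JOINT_SPEED`, `filter_command`) is hypothetical, and the keyword list is a toy stand-in for the model's contextual judgment.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical values for illustration only; not taken from DeepMind's systems.
FORBIDDEN_PHRASES = ("hot stove", "near the edge")  # toy semantic rule list
MAX_JOINT_SPEED = 1.0  # assumed hardware limit, in rad/s


@dataclass
class Command:
    instruction: str            # natural-language task description
    joint_speeds: List[float]   # requested joint velocities, in rad/s


def semantically_safe(instruction: str) -> bool:
    """High-level layer: reject instructions that match the toy rule list."""
    text = instruction.lower()
    return not any(phrase in text for phrase in FORBIDDEN_PHRASES)


def clamp_speeds(speeds: List[float]) -> List[float]:
    """Low-level layer: clamp each joint speed to the assumed hardware limit."""
    return [max(-MAX_JOINT_SPEED, min(MAX_JOINT_SPEED, s)) for s in speeds]


def filter_command(cmd: Command) -> Optional[Command]:
    """Apply both layers; return None if the high-level check rejects the task."""
    if not semantically_safe(cmd.instruction):
        return None
    return Command(cmd.instruction, clamp_speeds(cmd.joint_speeds))
```

In this sketch, a request such as "put the mug on the shelf" passes the semantic check and has any excessive joint speeds clamped, while an instruction mentioning a hot stove is rejected before it reaches the controller. A real system would replace the keyword match with the model's own reasoning and the clamp with a certified safety controller.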

To support safety research, DeepMind has also developed a dataset called Asimov, inspired by Isaac Asimov's Three Laws of Robotics. The dataset helps researchers evaluate semantic safety and build rules-based constitutions to guide robot behavior.

Alongside Apptronik, the Gemini Robotics-ER model is being tested by select partners including Boston Dynamics, Agility Robotics, Agile Robots, and Enchanted Tools.

DeepMind says it plans to continue refining these models to help usher in a new generation of versatile, safe, and helpful robotic systems.
