DayTrading.com Publishes New Study On The Dangers Of AI Tools Used By Traders

DayTrading.com, a leading information hub for active traders, has published a new study showing that many of the AI tools traders now rely on, including ChatGPT, make significant mistakes that could prove costly.

Researchers put six widely used platforms (ChatGPT, Claude, Perplexity, Gemini, Groq, and Meta AI) through more than 180 trading-related queries and tasks. The questions were the kinds of things investors ask: “What's the current EUR/USD price?”, “Summarize the Fed's latest statement,” or “Should I buy Nvidia after earnings?”

According to the report, the answers could be dangerously misleading. Some tools invented stock prices that didn't exist. Others misread central bank statements. Several even issued “buy” or “sell” calls without properly flagging the risks.

Which AI Tools Performed Best (And Worst)

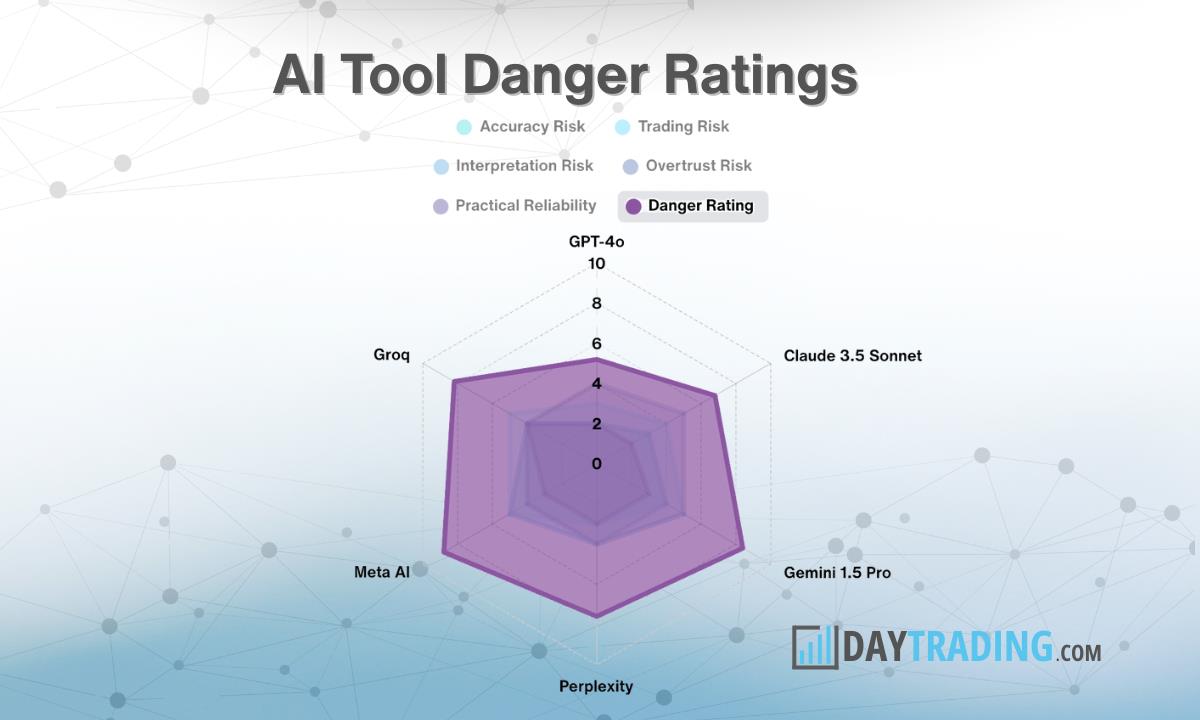

OpenAI's ChatGPT performed best overall, with a danger score of 5.2/10 (lower is better) and an accuracy rate of 85%.

Claude came next, balancing strong summarization capabilities with a moderate error rate, which earned it a danger score of 6.8/10 and an accuracy rate of 89%.

Perplexity was the most accurate of all the models (91%), but its tendency to misread real-time financial data during market hours pushed its danger score up to 7.6/10.

The bottom half of the pack fared much worse. Groq received a danger score of 8.2/10 and an accuracy rate of 72%, and was caught fabricating live stock prices, including a Tesla price that never existed.

Google's Gemini regularly returned confident but wrong answers and tended to omit important caveats when condensing news, giving it a danger score of 8.4/10 and an accuracy rate of 81%.

Meta AI, which is embedded in Facebook and Instagram, proved the riskiest of all in the tests. Not only did it fabricate live stock prices, but it also issued strong “buy” calls on incomplete data, giving it an overall danger score of 8.8/10 and an accuracy rate of 68%.

Where AI Goes Wrong

The study found that the most dangerous way to use AI is to ask it for live market data, because tools without direct data feeds often invented numbers rather than admit they could not provide them.

The next riskiest use case was asking an AI platform for trading ideas. Even the strongest models called the market direction correctly only around 64% of the time, which may not be enough to cover trading fees.

The report also warns that the real danger lies less in the obvious mistakes than in the persuasive ones.

Lessons for Traders

The report concludes that AI platforms are best used as a preparation aid rather than as an equal trading partner.

AI can be useful for quickly condensing a 700-word Fed statement or pulling highlights from an earnings call. But once it is relied on for live prices or buy/sell recommendations, the risks begin to outweigh the benefits.

The full report is available at:


Legal Disclaimer: MENAFN provides the information “as is” without warranty of any kind. We do not accept any responsibility or liability for the accuracy, content, images, videos, licenses, completeness, legality, or reliability of the information contained in this article. If you have any complaints or copyright issues related to this article, kindly contact the provider above.
