Ethical Considerations Of AI In Autonomous Robots: Bias, Accountability And Societal Impact

August 29, 2025 by Mai Tao

Artificial intelligence is now embedded in robots that are not only assembling cars or moving pallets, but also serving food, delivering parcels, assisting the elderly, and even teaching children.

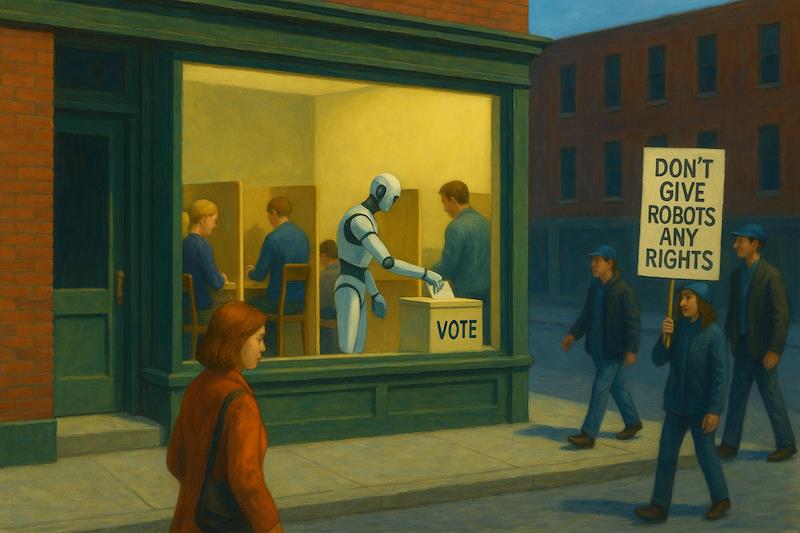

As these systems grow more autonomous, the ethical stakes become unavoidable: what happens when a robot makes a decision that has moral, social, or legal consequences?

Robots powered by advanced AI are no longer passive tools. They perceive, interpret, and act in ways that increasingly resemble human decision-making. That raises pressing concerns about bias, accountability, and the broader societal impact of machines that operate with growing independence.

The debate is no longer confined to research labs or science fiction conventions. Governments, regulators, and industry bodies are all scrambling to define rules of the road, while companies race ahead to capture new markets.

This feature looks at the three key ethical dimensions – bias, accountability, and societal impact – as robots become more intelligent.

Bias in AI-driven robots

Bias in AI systems is a well-documented problem, stemming from the data they are trained on and the design choices made by engineers. When these biases are embedded in autonomous robots, they manifest in real-world interactions.

For instance, service robots in hospitals or retail environments might respond differently to people of different genders, ages, or ethnicities. Delivery robots navigating urban areas could be programmed, unintentionally or otherwise, to prioritize certain neighborhoods over others, amplifying existing inequalities.

Scholars have warned that without intervention, AI bias could harden into physical systems. The European Parliament's Special Committee on Artificial Intelligence in a Digital Age has said: “Bias in algorithms, if left unchecked, can lead to discrimination and exclusion in automated decision-making processes.”

Solutions being developed include transparency in training datasets, independent auditing of robotic systems, and standardized ethical design frameworks. The IEEE, for example, has published Ethically Aligned Design, a set of guidelines intended to help engineers build systems that prioritize human values.
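As a rough illustration of what an independent audit might check, the sketch below compares how often a hypothetical service robot completes an interaction successfully for people in different groups, then reports the largest gap between groups. The log format, the group labels, and the function names are all assumptions made for this example; the interaction log is invented, and a real audit would use far more data and more than one fairness measure.

```python
from collections import defaultdict


def success_rates(log):
    """Return the fraction of successful interactions for each group.

    `log` is a list of (group, succeeded) pairs. This format is
    hypothetical and chosen only to keep the example small.
    """
    totals = defaultdict(int)
    successes = defaultdict(int)
    for group, succeeded in log:
        totals[group] += 1
        if succeeded:
            successes[group] += 1
    return {group: successes[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Largest difference in success rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Invented interaction log for illustration, not real data.
sample_log = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = success_rates(sample_log)
gap = parity_gap(rates)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it is the kind of signal an auditor could use to decide where to look more closely.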

Accountability and responsibility

The “accountability gap” is one of the most hotly debated issues in robotics ethics. If an autonomous robot causes harm, who is responsible? The company that designed the hardware, the developers who built the AI, the operator who deployed it, or the end-user?

Incidents in the automotive and aviation industries show how messy accountability can be when software malfunctions. Tesla has faced regulatory scrutiny over its Autopilot system after several high-profile crashes.

In aviation, Boeing's 737 MAX crisis demonstrated how automation errors can quickly escalate into global safety concerns.

The legal frameworks remain under development. In the US, liability laws tend to hold manufacturers and operators responsible, while in Europe, discussions are advancing around creating an AI liability directive. The European Commission has stated: “Clear rules on liability are needed to ensure trust and uptake of AI-based products.”

Some researchers have floated the idea of granting legal personhood to AI systems, allowing them to be held responsible in limited ways. But industry consensus is that accountability must remain with humans – either corporate or individual.

Robotics companies are experimenting with approaches such as insurance-backed deployments, public safety pledges, and transparency reports to reassure regulators and customers alike.

Societal impact of intelligent robots

The broader societal consequences of autonomous robots extend far beyond technical glitches.

Employment is one of the most visible areas. Humanoids, warehouse robots, and autonomous vehicles all promise efficiency but raise fears of large-scale labor displacement.

While proponents argue that robots free humans from repetitive or dangerous tasks, critics warn of widening economic inequality if reskilling programs lag behind automation adoption.

Social relationships are another area of concern. Care robots in nursing homes or companion robots for children may reduce loneliness, but could also lead to over-dependence on machines for emotional support.

Cultural attitudes toward robots vary widely – while Japan has been generally receptive, Western societies often express more suspicion, shaped by decades of dystopian science fiction narratives.

Global inequalities are also at stake. Wealthy nations with advanced robotics ecosystems may capture the lion's share of the benefits, while lower-income countries risk being left behind in a two-speed technological world.

Emerging frameworks and proposed solutions

Despite the challenges, efforts are under way to embed ethics into AI and robotics.

The European Union's AI Act, which entered into force in August 2024 and whose obligations are being phased in, classifies AI systems by risk and imposes obligations on developers. UNESCO has published global recommendations on AI ethics, adopted by almost 200 countries. IEEE's Ethically Aligned Design continues to influence engineers worldwide.

On the industry side, leading robotics firms are introducing their own voluntary principles. Boston Dynamics, for example, published an open letter pledging not to weaponize its robots. Tesla and Figure AI frequently emphasize safety-first design in their public communications.

The balance between regulation and innovation remains delicate. Overregulation could stifle breakthroughs, while underregulation risks public backlash and accidents that damage trust.

A growing chorus of experts is calling for “ethical audits” of AI systems, greater transparency of training data, and hybrid human-AI decision frameworks to ensure that humans remain in the loop.
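One way to picture a hybrid human-AI decision framework is as a simple gate: the robot acts on its own only when a decision is both low-risk and high-confidence, and hands everything else to a person. The sketch below is a minimal illustration under those assumptions. The `Decision` fields, the `route` function, the action names, and the 0.9 threshold are hypothetical and not drawn from any particular product or standard.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str        # what the robot proposes to do
    confidence: float  # the system's self-reported confidence, 0.0 to 1.0
    high_risk: bool    # whether the action could cause serious harm


def route(decision, confidence_floor=0.9):
    """Return "execute" if the robot may act alone, else "escalate".

    High-risk actions always go to a human, regardless of confidence.
    The default threshold is an arbitrary value chosen for illustration.
    """
    if decision.high_risk or decision.confidence < confidence_floor:
        return "escalate"
    return "execute"


print(route(Decision("deliver_parcel", 0.97, False)))
print(route(Decision("administer_medication", 0.99, True)))
print(route(Decision("deliver_parcel", 0.50, False)))
```

The design choice worth noting is that risk overrides confidence: a system that is very sure of itself still cannot take a high-risk action without human sign-off.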

The path forward

As robots become more intelligent and autonomous, the ethical challenges of bias, accountability, and societal impact move from abstract thought experiments to urgent, practical concerns.

The path forward will require collaboration between governments, companies, and civil society to ensure that robots are deployed in ways that are fair, transparent, and aligned with human values.

The question is not whether autonomous robots will enter our daily lives – that process is already under way. The real question is whether society can ensure that their intelligence serves everyone, rather than a select few.

